SAFE AI Policy

At SAFE Security, we are committed to ensuring transparency, security, and reliability in the use of Artificial Intelligence (AI) and Machine Learning (ML) technologies across our products and services. This FAQ provides clear answers to common questions about how AI is integrated into our offerings, how we manage and protect customer data and uphold privacy standards, and how we ensure transparency in our use of AI and ML models.

Updated 2025-06-06

How does AI work in SAFE Products?

SAFE uses a combination of open-source, self-hosted, and third-party hosted models to deliver an AI-powered experience tailored to you, your teams, and your workflows. AI within SAFE is used in three primary ways:

- Automated processing of uploaded information to ingest it into the SAFE Platform;

- Agentic AI to optimize your workflows; and

- Generative AI to provide insights into your data and assist you in using the SAFE Platform.

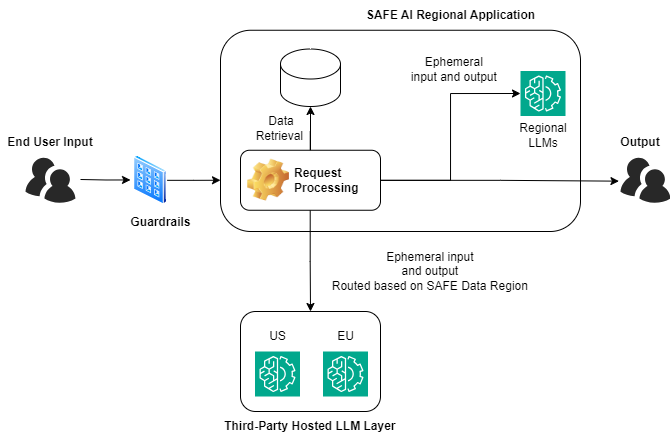

The high level data flow is shown below:

Neither SAFE nor the Large Language Model (LLM) providers use your data for training purposes. SAFE stores prompts for usage history, and that history is limited to the user who initiated the query. This approach maintains the privacy of your data throughout the entire process.

How does SAFE use customer data?

SAFE processes user inputs solely to generate the outputs the user has requested. We may also process organizational data from within your tenant that the user has permission to view, and include that data with the user's inputs so that LLMs can provide more accurate, relevant, and contextual responses.

The LLM providers we use do not retain your inputs and outputs, or use them to improve their services.

In addition to the restrictive policies we have put in place for our LLM providers, we also limit the use of, and access to, customer data within our platform. Customer inputs and outputs are used only to serve and improve individual customer experiences. They are not used for model training across customers.

SAFE may store your inputs and outputs for a limited period of time to reduce latency (e.g. when displaying a page summary), or when required to provide a specific feature (e.g. displaying a search history).

Customers are responsible for ensuring that any personal data submitted through SAFE AI complies with applicable data protection laws and that proper consent has been obtained from data subjects where required.

Which LLMs are being used?

We use a diverse range of LLMs to deliver the best outcomes for customers: open-source and AWS Bedrock-hosted LLMs, including Anthropic's Claude Sonnet models and Amazon's Nova models, alongside third-party hosted LLMs from OpenAI's GPT series. Our features use dynamic routing to select the mix of models that delivers the best experience and accuracy.

The LLM providers we use do not retain your inputs and outputs, or use them to improve their services.

Customers can request our list of data sub-processors for more information on our third-party hosted LLM providers. While SAFE uses contractual safeguards with its LLM providers, including data processing agreements (DPAs), customers acknowledge that these providers operate their own infrastructure and that SAFE does not have full operational control over their internal compliance practices.

Do any of the LLM providers store customer data?

No, the LLM providers we use do not retain your inputs and outputs, or use them to improve their services.

Does SAFE share any data with third parties?

The data users submit and the responses users receive via AI features in the SAFE platform are not used to train, fine-tune, or improve any LLMs or services. For third-party hosted models, each data request is sent individually to the third-party provider over a TLS-encrypted connection; the provider processes it and sends the response back to SAFE. Other than prompts, data is not persisted.

Customer prompts are stored by SAFE for individual user history, analysis and abuse prevention. Customers can request prompt removal by contacting SAFE support.

Is it possible to limit the LLM providers used by the SAFE Platform?

No. SAFE uses dynamic routing between models and does not support provider selection.

Is SAFE using my inputs and outputs or customer data to train SAFE products and services?

No, SAFE does not use your inputs, outputs or customer data to train SAFE products.

Does SAFE use my data to serve other customers?

No. The data you submit and the responses you receive are used only to support your experience, and SAFE AI processes only data specific to the querying SAFE tenant. This data is not used to train models across customers or shared across customers.

Is any data transferred outside of my SAFE tenant?

When you use AI in the SAFE platform, data is transferred outside of the current site to third-party LLM providers in order to generate a response. This transfer follows existing SAFE security practices, and the data is not persisted by the providers. Each data request is sent individually to our LLM providers over a TLS-encrypted connection, processed, and returned to the SAFE platform.

Can I disable AI in SAFE if my organization is not ready to use it?

Yes. If you are an admin, you can log into SAFE and go to “Advanced Settings” to manage AI usage in SAFE. Note that products or features that rely entirely on AI will be deactivated. This includes SAFE's TPRM module, with its integrated AI assistants and workflow processing.

How do user roles and access permissions apply to AI features?

SAFE AI has two main feature sets, with the following role management capabilities:

- SAFE X, our primary search and query tool, is accessible only to users with an Admin role.

- AI assistants are feature-based, and access to them is granted in line with the feature access assigned by user role. For example, TPRM AI assistants are available only to users with access to TPRM features.

How do SAFE AI capabilities ensure my data is protected?

SAFE AI is built with a security-first architecture to ensure your data remains private, controlled, and protected at all times. Key safeguards include:

- Secure Cloud Infrastructure

All customer-specific data is stored within SAFE's AWS infrastructure, protected by encryption at rest and in transit, and governed by ISO 27001 and SOC 2 Type 2 certified controls.

- Data Residency and Encryption

All customer data is stored within AWS infrastructure, using encryption at rest and in transit. SAFE inherits AWS's security best practices while layering on additional access controls specific to AI workloads.

- No Data Sharing or Training on Customer Data

SAFE enforces tenant-level isolation and does not use any customer data to train AI models, ensuring privacy and strict data boundaries.

- AI Usage Governance

SAFE AI operates within defined guardrails; its usage is continuously monitored and governed by internal security policies to prevent misuse or overreach of AI capabilities.

- Bias, Accuracy, and Fairness Reviews

SAFE conducts targeted evaluations of AI outputs to identify potential bias, validate accuracy, and uphold fairness in outcomes, in line with regulatory expectations such as those set by GDPR and CCPA.

- Transparent and Auditable Logs

All AI interactions are logged in a tamper-evident format to support traceability, internal investigations, and customer audit requirements.

Does SAFE AI respect data residency?

SAFE holds all customer data in the data region selected for the SAFE tenant. SAFE uses a diverse range of advanced LLMs to provide SAFE AI features, and some of these models may not be available in every data region. LLM processing currently occurs as follows:

- US Data Region - LLM processing occurs in the US

- EU Data Region - LLM processing occurs in the EU

- Rest of World - LLM processing occurs in the US

Does SAFE AI impact my compliance with GDPR?

We are committed to helping our customers stay compliant with GDPR and their local requirements. As we do today for the SAFE Platform, we will process and transmit data for SAFE AI in accordance with GDPR.

SAFE AI is designed to support GDPR compliance by operating within a framework that emphasizes data minimization, access controls, and transparency. SAFE AI does not independently collect personal data; it processes only the data provided by users within the platform's secure and controlled environment. Customers remain in control of their data and are responsible for ensuring that any personal data shared with SAFE AI complies with applicable legal requirements, including proper consent from data subjects where required.

Is SAFE AI compliant with SOC 2 and ISO 27001?

SAFE AI is developed using infrastructure, processes, and code that are governed by our SOC 2 Type 2 and ISO 27001 certified controls. These certifications demonstrate that our security and compliance practices meet industry standards for protecting data and ensuring operational integrity.

SAFE AI operates within the same secure development lifecycle, access controls, encryption standards, and monitoring systems that are independently audited under these frameworks. This ensures that the way we build and manage AI at SAFE reflects our commitment to enterprise-grade security and trust.

Is SAFE AI compliant with HIPAA?

SAFE AI is not designed to process Protected Health Information (PHI) and as such is not HIPAA compliant. Customers should not submit healthcare or patient-related data that falls within the scope of HIPAA to SAFE AI. SAFE does, however, follow industry best practices for the software design principles highlighted by HIPAA, including data access control, encryption, audit trails, data security protocols, data integrity, and software updates and maintenance.

Can I limit or restrict the data that is shared with SAFE AI?

Activating or deactivating AI lets you manage where AI experiences appear for your users.

If you have AI activated, SAFE AI features can access and use data on the SAFE Platform.

Disclaimer. SAFE does not represent, warrant, or otherwise guarantee that the output generated by SAFE AI will be accurate, complete, or current. As such, you should independently review and verify all output as to usefulness, appropriateness, accuracy, fitness for its purpose, and any other quality relevant to your use cases and/or applications. You are solely responsible for the content of the input and your use of the output.