Key Use Cases of AI in Risk Management

Fight fire with fire: the same AI techniques that attackers use can be turned against them.

From AI-driven cyber risk reporting in natural language to predicting cyber attacks with machine learning to unmasking highly personalized phishing emails, artificial intelligence (AI) has created a brand-new threat landscape for CISOs and other risk and security managers. The challenge they all share is adapting quickly to incorporate AI while holding the line on cyber defense. This blog post will get you started.

Understanding AI in Risk Management

Let’s define some key terms:

- Artificial Intelligence (AI) equips computers to emulate human intelligence, such as recognizing patterns and solving problems.

- Machine Learning (ML) is a subset of AI in which algorithms learn patterns from large data sets (data analytics) instead of being explicitly programmed for each task.

- Deep Learning (DL) is a subset of ML that uses multi-layered neural networks, loosely modeled on the way biological neurons work, to process information.

- Generative AI (GenAI) refers to deep learning models that are trained on large data sets to generate new text, images or code.

- A Large Language Model (LLM) is a type of generative AI model, trained with machine learning on very large amounts of text, that can understand and generate language to answer queries and perform other Natural Language Processing (NLP) tasks.

How AI is used in cybersecurity and cyber risk management

Because it can generate and act on analysis at a depth and speed beyond human ability, AI is poised to revolutionize information security. Here are a few examples:

- Natural-language reporting via GenAI on the current threat landscape, control status, top risks and more

- Realistic threat simulation for training

- Adaptive responses to zero-day or APT attacks

- Identifying phishing emails through AI pattern recognition and adjusting rapidly as new forms of phishing emerge

- Enhancing Intrusion Detection Systems with Neural Networks to improve accuracy

- Modeling risk with neural networks that estimate likelihood and impact from historical data
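The last item above builds on the classic risk formula, risk = likelihood × impact. As a minimal sketch of how such estimates turn into a prioritized list, the Python below multiplies the two and ranks scenarios by expected annual loss. The scenario names and numbers are hypothetical, and they are hand-entered here in place of the neural-network estimates the text describes.

```python
from dataclasses import dataclass


@dataclass
class RiskScenario:
    name: str
    likelihood: float  # estimated annual probability of the event (0..1)
    impact: float      # estimated loss in dollars if the event occurs

    @property
    def expected_loss(self) -> float:
        # Classic risk formula: risk = likelihood x impact
        return self.likelihood * self.impact


def rank_risks(scenarios: list[RiskScenario]) -> list[RiskScenario]:
    """Return scenarios ordered from highest to lowest expected loss."""
    return sorted(scenarios, key=lambda s: s.expected_loss, reverse=True)


# Hypothetical estimates; in practice a model trained on historical
# incident data would supply likelihood and impact.
scenarios = [
    RiskScenario("Ransomware on file servers", likelihood=0.10, impact=2_000_000),
    RiskScenario("Phishing-led account takeover", likelihood=0.40, impact=250_000),
    RiskScenario("Third-party data breach", likelihood=0.05, impact=1_500_000),
]

for s in rank_risks(scenarios):
    print(f"{s.name}: ${s.expected_loss:,.0f}")
```

Note that the ransomware scenario outranks the more likely phishing scenario because its impact is much larger; a likelihood-only view would rank them the other way.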

5 Benefits of AI for Risk Management

1. Communication:

Generative AI can report on the status of a cybersecurity risk management program in natural-language text and graphics, breaking down communication barriers between the cyber team and stakeholders in the business.

2. Speed:

Real-time monitoring and analysis of patterns in cybersecurity data create actionable insights at the speed of the threat environment and put cyber defenders on par with attackers.

3. Automation:

Routine risk management tasks, such as ingesting questionnaires from third-party vendors or processing alerts in a SOC, can be automated. This reduces time spent and analyst burnout while freeing capacity for other work.

4. Data analytics:

Machine learning identifies cyber threat indicators faster, and across far more data, than human analysts can.

5. Improved predictive analytics:

AI can infer when and how future attacks might happen, suggesting where to prioritize cybersecurity efforts.

Challenges in Implementing AI for Risk Management

With businesses rushing ahead on AI adoption, risk managers are struggling to keep up on two fronts: assessing the potential harm to the organization from internal AI use and from external AI-enabled threats, and adopting AI tools themselves for defense and risk mitigation.

Some of the top-of-mind concerns for AI and risk management:

- Data privacy and security

AI applications gather enormous amounts of data, which effectively expands an organization's attack surface and raises the risk of data breaches. Further complicating the issue, AI tools trained on sensitive corporate information can inadvertently leak that information outside the company. Large data sets also raise privacy issues: personal data about individuals may be used without their consent, and even when data is anonymized, sophisticated AI systems can be used to re-identify individuals.

AI-powered facial recognition is a particular privacy concern. AI can conduct video surveillance, looking for suspicious behavior in public places to aid police, but adding facial recognition turns it into a major privacy issue. At the 2024 Olympics, France permitted video surveillance but forbade facial recognition.

- Integration with existing systems and processes

Although AI is in many ways new and unfamiliar, AI risk can fit into established cyber risk management practices and processes. The National Institute of Standards and Technology (NIST), author of the popular Cybersecurity Framework (NIST CSF), has released an AI Risk Management Framework (AI RMF) that organizes AI risk management into four functions: Govern, Map, Measure and Manage.

Still, the AI RMF concedes that AI does not fit the CSF's premise that adding more technical controls achieves a lower-risk state of “maturity.” AI risk is best understood in terms of risk scenarios with a range of probabilistic outcomes, an invitation to adopt cyber risk quantification.
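Quantifying a risk scenario as a range of probabilistic outcomes usually means Monte Carlo simulation. The sketch below is a minimal illustration under assumptions of our own: the number of loss events per year follows a Poisson distribution with a hypothetical rate, each event's loss is lognormal with a hypothetical median and spread, and the figures are invented. It mirrors the frequency-times-magnitude structure used in FAIR-style quantification but is not an implementation of the FAIR standard itself.

```python
import math
import random
import statistics


def sample_poisson(rng: random.Random, lam: float) -> int:
    """Draw a Poisson-distributed event count (Knuth's method, fine for small lam)."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def simulate_annual_losses(event_rate: float, loss_median: float, loss_sigma: float,
                           trials: int = 10_000, seed: int = 42) -> list[float]:
    """Simulate total loss per year for one risk scenario across many trial years."""
    rng = random.Random(seed)
    mu = math.log(loss_median)  # a lognormal's median is exp(mu)
    losses = []
    for _ in range(trials):
        events = sample_poisson(rng, event_rate)  # how many loss events this year
        losses.append(sum(rng.lognormvariate(mu, loss_sigma) for _ in range(events)))
    return losses


# Hypothetical scenario: sensitive data leaked through an internal AI tool,
# expected about once every two years, median loss $200,000 per event.
losses = simulate_annual_losses(event_rate=0.5, loss_median=200_000, loss_sigma=1.0)
mean_loss = statistics.fmean(losses)
p90 = statistics.quantiles(losses, n=10)[-1]  # 90th percentile of annual loss

print(f"Mean annualized loss: ${mean_loss:,.0f}")
print(f"90th percentile loss: ${p90:,.0f}")
```

The output is a distribution rather than a single score, so the same run can answer both "what do we expect to lose on average?" and "how bad could a bad year be?"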

- Need for skilled personnel to manage AI

The shortage of cybersecurity professionals is well documented (4 million globally, according to the World Economic Forum), so the number of cybersecurity professionals who also have AI risk experience must be vanishingly small. Tech companies are keenly interested in “reskilling” employees for the GenAI future: in one survey of technology and communications work, 92 percent of the jobs analyzed are expected to “undergo either high or moderate transformation due to advancements in AI.” Some key skills expected to be in demand, each needing a security component:

- How to build a chatbot

- How to train a foundation AI model

- Ensuring integrity and reliability of an AI application

- Knowledge of generative AI architectural patterns

While AI may widen the skills shortage, consider this: if AI also eliminates routine work in cybersecurity, such as the flood of alerts that deluges SOC analysts, there may be plenty of time for reskilling toward higher-level functions in AI security.

- Potential for bias in AI algorithms

Drugstore chain Rite Aid was banned by the US Federal Trade Commission from using facial recognition technology for five years after its AI facial recognition system was found to be biased toward identifying people of color and women as shoplifters. Amazon had to drop an experimental machine learning recruiting tool that discriminated against women job applicants because it had learned from the resumes of a heavily male workforce.

Bias can infect AI systems through:

- Biased training data, such as data sets that overrepresent white men

- Biased algorithms that overweight some factors over others

- Cognitive biases of the people who build AI systems, which may unconsciously influence those systems

Developers of large language models should actively check their datasets for bias, just as they would other errors.
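One common way to check a model's outcomes for bias is to compare selection rates across groups. The sketch below computes each group's selection rate and the ratio of the lowest to the highest rate, then flags ratios under 0.8, the "four-fifths rule" threshold from US employment-selection guidelines. Using this particular metric and threshold is our assumption, not a method the text prescribes, and the resume-screening outcomes are hypothetical, loosely echoing the Amazon example above.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs; returns the selection rate per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact(rates):
    """Ratio of the lowest group selection rate to the highest."""
    return min(rates.values()) / max(rates.values())


# Hypothetical outcomes from a resume-screening model
records = ([("men", True)] * 60 + [("men", False)] * 40
           + [("women", True)] * 30 + [("women", False)] * 70)

rates = selection_rates(records)
ratio = disparate_impact(rates)
print(rates)
print(f"Disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("Below the four-fifths threshold: investigate the training data and model")
```

The same check can run on a model's training labels before training and on its live decisions afterward, which catches both biased data and bias introduced by the algorithm.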

Expert Tip: AI Systems Require Constant Vigilance

Unlike traditional software, a GenAI application can “drift,” its behavior changing over time as input data shifts or as it reacts to its own feedback loop. A fundamental problem with AI systems is that many are “black boxes”: nobody knows with certainty what is going on inside. They are also prone to “hallucinate,” producing false but believable results. Risk management must therefore include ongoing audits of AI model performance.
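Ongoing audits for drift can start with a simple statistical comparison between a model's recent inputs or outputs and a baseline captured at deployment. The sketch below uses the Population Stability Index (PSI), a technique we have chosen for illustration; the text does not name one. The scores are synthetic, and the readings of PSI below 0.1 as stable and above 0.25 as a significant shift are common rules of thumb, not thresholds from the source.

```python
import math
import random


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a recent sample."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # guard against all-identical values

    def proportions(values):
        # Share of values falling in each equal-width bin (eps avoids log(0))
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic model confidence scores: baseline at deployment vs. two later windows
rng = random.Random(7)
baseline = [rng.gauss(0.7, 0.1) for _ in range(5000)]
recent_stable = [rng.gauss(0.7, 0.1) for _ in range(5000)]
recent_drifted = [rng.gauss(0.5, 0.15) for _ in range(5000)]

print(f"stable:  {psi(baseline, recent_stable):.3f}")
print(f"drifted: {psi(baseline, recent_drifted):.3f}")
```

Running a check like this on a schedule, and alerting when the index crosses a chosen threshold, turns "constant vigilance" into a repeatable control.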

Use cases of AI in Cyber Risk Management

Chatbot-style Interface for Risk Reporting

LLMs and generative AI make possible a conversational relationship with risk management applications, much like the popular chatbot ChatGPT. A user can ask, for instance, about the status of controls or top risks and receive insightful responses, even practical advice on steps to reduce loss exposure, in text or graphics.

Ingest Security Documentation

From SOC 2 reports to NIST CSF maturity scores to asset inventories, cybersecurity teams must make sense of disparate reporting on cybersecurity metrics that arrives in different formats. With their enormous capacity to ingest text and data, LLMs can rapidly collate this material into easily digestible reports. A Generative AI tool can greatly reduce the time needed to assess a supply chain partner's response to a security questionnaire, a task typically done manually today.

Cybersecurity Threat Detection

AI is revolutionizing threat intelligence and threat detection. Traditional methods rely on heuristics and signatures to spot known threat actors. AI tools with machine learning can scan huge amounts of data in real time and quickly spot abnormal behavior, even a never-before-seen zero-day attack.
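Production machine learning detectors are far more sophisticated, but the core idea of anomaly detection, learning what normal looks like and flagging deviations from it, can be sketched with a simple statistical baseline. The example below substitutes a z-score test for a trained model; the login counts and the threshold of three standard deviations are hypothetical choices for illustration.

```python
import statistics


def find_anomalies(baseline, observations, threshold=3.0):
    """Flag observations more than `threshold` standard deviations from the baseline mean."""
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)
    flagged = []
    for i, value in enumerate(observations):
        z = (value - mean) / stdev if stdev else 0.0
        if abs(z) > threshold:
            flagged.append((i, value, round(z, 1)))
    return flagged


# Hypothetical failed-login counts per hour for one server
baseline = [12, 15, 11, 14, 13, 16, 12, 14, 15, 13]
today = [14, 13, 95, 12]

for hour, count, z in find_anomalies(baseline, today):
    print(f"hour {hour}: {count} failed logins (z = {z})")
```

Unlike a signature, this baseline needs no prior knowledge of the attack: the spike is flagged simply because it is far outside normal behavior, which is why anomaly-based detection can catch novel threats.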

Predictive Models

A major promise of artificial intelligence and neural networks is not just detecting threats but actively responding to them. For instance, predictive analytics by neural networks might analyze data from thousands of distributed IoT devices to spot red flags that could indicate vulnerabilities or an ongoing compromise. Another promising use is simulating attack scenarios that cyber defenders have never seen (or thought of) before.

Phishing Detection

AI-powered phishing defense acts on several levels:

- Textual analysis of emails identifies common red flags.

- Domain analysis spots fake senders.

- URL analysis identifies phishing websites.

AI can also quickly pick up on user behavior that signals a compromised account, such as queries for unusually large amounts of data.
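Domain analysis can be as simple as flagging sender domains that are nearly, but not exactly, identical to a trusted domain, such as "examp1e.com" posing as "example.com". The sketch below uses Levenshtein edit distance for this, a technique we have picked for illustration; the trusted-domain list and the maximum distance of 2 are hypothetical, and real AI-based filters combine many more signals.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed with dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                 # deletion
                            curr[j - 1] + 1,             # insertion
                            prev[j - 1] + (ca != cb)))   # substitution
        prev = curr
    return prev[-1]


# Hypothetical list of domains the organization trusts
TRUSTED_DOMAINS = {"example.com", "payroll-example.com"}


def is_lookalike(sender_domain: str, max_distance: int = 2) -> bool:
    """True if the domain is close to, but not the same as, a trusted domain."""
    domain = sender_domain.lower()
    if domain in TRUSTED_DOMAINS:
        return False
    return any(edit_distance(domain, t) <= max_distance for t in TRUSTED_DOMAINS)


for d in ["example.com", "examp1e.com", "exarnple.com", "unrelated.org"]:
    print(d, is_lookalike(d))
```

"exarnple.com" is caught because "rn" renders much like "m"; it is two edits from the real domain, within the allowed distance.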

Bot Identification

AI can be trained to spot bots through repeated actions in short periods, device fingerprinting, traffic spikes and IP lookups, all in real time.
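The first of those signals, repeated actions in short periods, comes down to counting requests per client within a sliding time window. The sketch below is a minimal rule-based version; a trained model would weigh this alongside fingerprinting and IP reputation. The window length, event limit and client addresses (taken from documentation IP ranges) are hypothetical, and timestamps are assumed to arrive in increasing order.

```python
from collections import defaultdict, deque


class RateDetector:
    """Flags clients that send more than max_events requests within window_seconds."""

    def __init__(self, max_events: int = 20, window_seconds: float = 10.0):
        self.max_events = max_events
        self.window = window_seconds
        self.events = defaultdict(deque)  # client id -> timestamps inside the window

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one request; return True if the client now looks like a bot."""
        q = self.events[client_id]
        q.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window
        while q and timestamp - q[0] > self.window:
            q.popleft()
        return len(q) > self.max_events


detector = RateDetector(max_events=20, window_seconds=10.0)
# Hypothetical traffic: a person clicks every 3 seconds, a script fires every 0.1 seconds
human = [detector.record("203.0.113.5", t * 3.0) for t in range(30)]
bot = [detector.record("198.51.100.7", t * 0.1) for t in range(30)]
print(any(human), any(bot))
```

The deque keeps memory per client proportional to the window rather than to the full request history, which matters when the check runs on live traffic.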

Incident Response

One of the great promises of AI in cybersecurity is a self-healing system – detecting a threat and immediately putting shields up, blocking malicious IP addresses or shutting down compromised user accounts, greatly reducing incident response time.
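At its core, a self-healing response is a playbook that maps detections to containment actions. The sketch below is a hypothetical illustration: the alert fields, the 0.9 confidence threshold and the action names are our own, and the "actions" only record what would be blocked or disabled rather than calling any real firewall or identity-provider API. Low-confidence alerts are routed to a human analyst instead of triggering automatic containment.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    kind: str          # hypothetical types: "malicious_ip" or "compromised_account"
    target: str        # IP address or user name
    confidence: float  # detector confidence, 0..1


@dataclass
class Responder:
    auto_threshold: float = 0.9
    blocked_ips: set = field(default_factory=set)
    disabled_users: set = field(default_factory=set)
    review_queue: list = field(default_factory=list)

    def handle(self, alert: Alert) -> str:
        # Uncertain detections go to a person rather than triggering automatic action
        if alert.confidence < self.auto_threshold:
            self.review_queue.append(alert)
            return "queued for analyst review"
        if alert.kind == "malicious_ip":
            self.blocked_ips.add(alert.target)  # stand-in for a firewall API call
            return f"blocked {alert.target}"
        if alert.kind == "compromised_account":
            self.disabled_users.add(alert.target)  # stand-in for an identity-provider API call
            return f"disabled {alert.target}"
        self.review_queue.append(alert)
        return "unknown alert type, queued"


responder = Responder()
print(responder.handle(Alert("malicious_ip", "198.51.100.23", 0.97)))
print(responder.handle(Alert("compromised_account", "jdoe", 0.95)))
print(responder.handle(Alert("malicious_ip", "203.0.113.9", 0.60)))
```

The confidence gate is the key design choice: automatic containment shortens response time, but acting on a false positive can lock out legitimate users, so only high-confidence detections bypass human review.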

Securing networks

AI systems assess network traffic, identifying patterns that may indicate the presence of an attacker. AI watches over email, endpoints and cloud apps in extended detection and response (XDR) solutions, or makes sense of incoming data in security information and event management (SIEM) solutions. Next-generation firewalls use threat intelligence to rapidly adapt to changes in the threat landscape. AI can also be deployed to automate patch management or the enforcement of security policies across the network.

Mitigate insider threats

AI systems can also detect signatures of insiders gone wrong, such as attempts to get past security controls and export a large amount of data off the network. But AI has also introduced a new form of insider threat – non-malicious abuse of AI systems. Examples:

- Leaking company-sensitive information through public LLM services such as ChatGPT.

- Training a model on data they do not have permission to use.

- Training a model without the safeguards needed to keep biased data inputs from corrupting the integrity of its outcomes.

- “Poisoning”: deliberately feeding the model incorrect information.

Mitigating risk from insiders in the AI era requires assessing a new set of risk scenarios, though quantitative techniques can still be applied.

AI-Powered Third-Party Risk Management (TPRM)

AI can play several important roles in third-party cyber risk management. Generative AI can sharply cut the time spent reviewing vendor security questionnaires. For critical vendors, best practice is to apply the highest level of scrutiny: continuous inside-out risk assessment of their controls and of your vendor-facing first-party controls, with data conveyed through APIs and assessed by AI in real time.

How SAFE Can Help with Your Risk Management

Organizations that prioritize security from the outset will be able to accelerate AI adoption, while those that neglect it stand to face significant challenges. But security and risk teams need to prove their value to their organizations by reacting with agility and speed to both the threat and opportunity of artificial intelligence.

At SAFE, we’re standing by to help CISOs handle both sides of the AI challenge. We offer AI-driven, risk-based functionality for:

- Real-time ingestion and analysis of telemetry on threats and controls from our customers

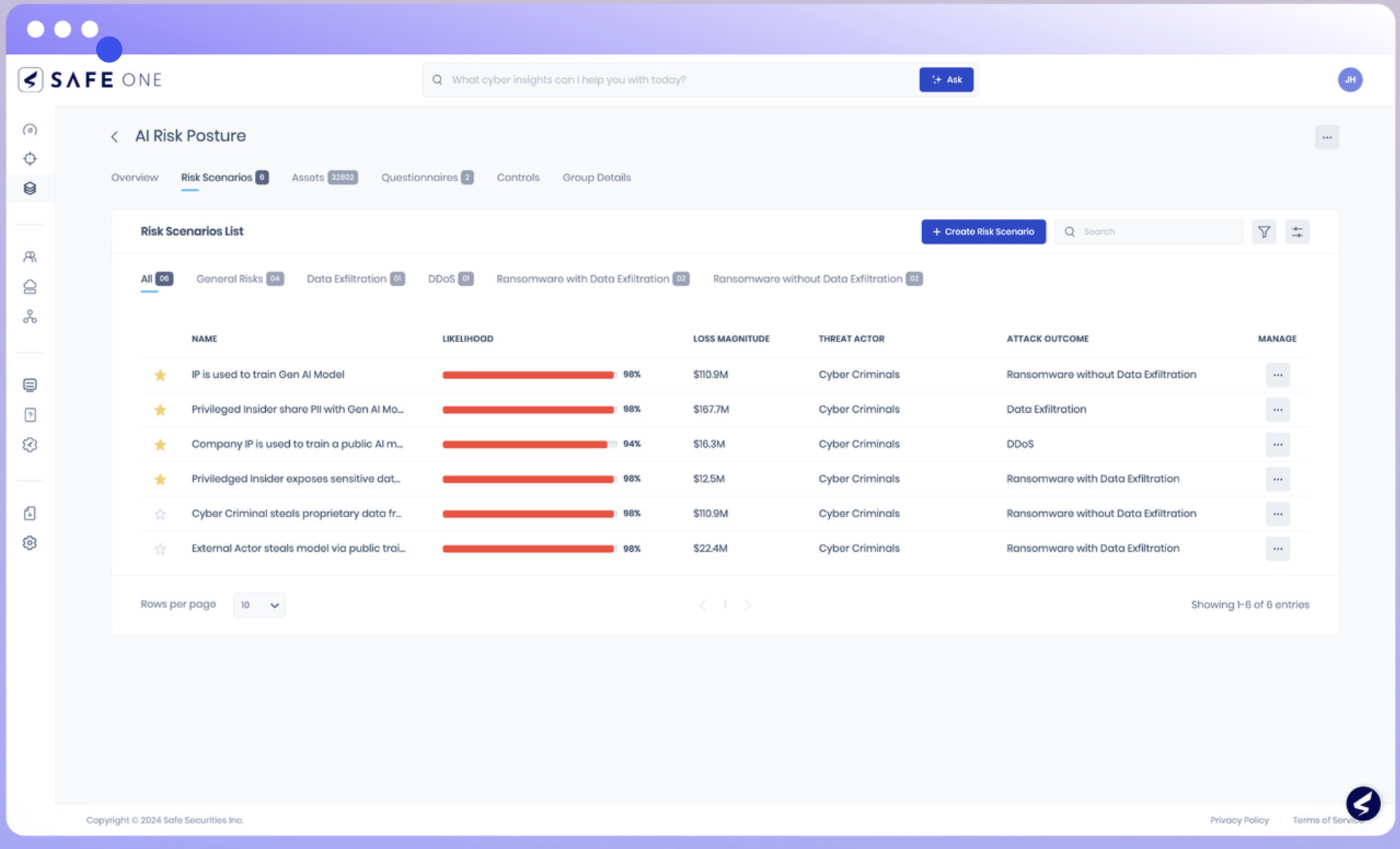

- AI risk scenario creation to feed quantitative cyber risk analysis in financial terms

- Quantitative analysis using AI for predictive analytics and Monte Carlo simulation to generate frequency and loss magnitude values

- Role-specific “next best steps” suggested in natural language through GenAI

- Natural language answers to questions (“What are our top risks?”)

- Third-party risk management with LLM analysis of questionnaires

SAFE offers a complete solution for quantitative analysis of AI-related risk based on FAIR (Factor Analysis of Information Risk), the international standard for cyber risk quantification, which outlines how to manage GenAI risk in a scalable and efficient manner.

To get the complete details on AI risk management with SAFE – read the white paper: