What Is Agentic AI in Cybersecurity

How to counter the threats and leverage the opportunities of the cutting-edge of artificial intelligence

Ready or not, AI has arrived in cybersecurity for both attackers and defenders in the form of AI agents (aka agentic AI or autonomous AI) capable of self-direction in seeking out and exploiting vulnerabilities, maneuvering around controls and even negotiating for ransom on the attack side, or identifying and containing attacks as they develop on the defense side.

In this post, we’ll cover the urgent AI risk management issues that cybersecurity leaders are confronting right now.

What Is Agentic AI?

Agentic AI is a type of artificial intelligence that uses specialized agents, working in tandem to complete a wide range of tasks in an “agentic workflow” – much like a self-driving car.

Let’s take an example of an autonomous, AI-driven solution for third-party risk management (TPRM). Onboarding a new vendor is typically a lengthy process for a risk management team, with many steps such as ingesting a security questionnaire and controls scans of the vendors, checking a contract or the vendor’s list of certifications. Agents trained to accomplish each task can combine efforts and move through all the tasks in minutes.

For a CISO or third party risk management program leader, that means a solution that can rapidly scale without adding headcount. For a TPRM analyst that means eliminating low-value, manual work to focus on what matters most.

“AI agents – that’s the beginning of an unlimited workforce,” Salesforce CEO Marc Benioff has said.

But workforce enhancement is just part of what AI agents can accomplish. Agents can also get creative with autonomous actions such as

–Learning from feedback and self-correcting

–Applying software tools as needed to complete tasks

–Planning out tasks on their own to accomplish an end without human intervention

–Engaging in “multi-agent collaboration,” leveraging a force multiplier effect.

Now, throw in the human factor, with AI in the role of collaborator. An emerging field is trying to understand human-AI interaction. As the NIST Artificial Intelligence Risk Management Framework calls AI systems “inherently socio-technical in nature, meaning they are influenced by societal dynamics and human behavior.”

LLMs vs Bots vs Agentic AI – What’s the Difference?

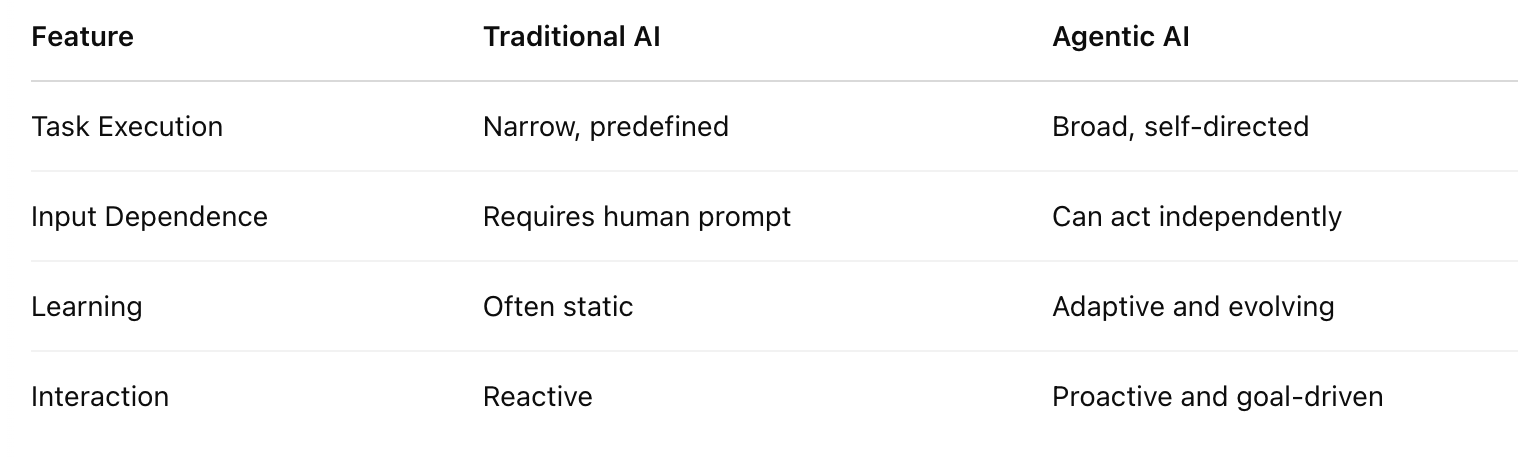

Agentic AI represents a significant leap beyond Large Language Models (LLMs) and traditional software bots by exhibiting autonomous decision-making and goal-oriented behavior. Unlike LLMs, which primarily generate text based on prompts, agentic AI can independently formulate goals, plan multi-step actions, and execute them to achieve those objectives with minimal human oversight.

Bots typically follow pre-defined rules or scripts, whereas an agentic AI system learns from its environment, adapts its strategies, and can proactively take initiative, making it a more dynamic and capable form of artificial intelligence.

Traditional AI vs Agentic AI

Agentic AI in the Cybersecurity Space

Those same traits of autonomy and collaboration make AI agents formidable players in the complex, high speed environment of cybersecurity. Let’s take a look at each team, attackers and defenders.

How Can Organizations Use Agentic AI for Cybersecurity Defense?

“As cyber threats grow in sophistication and scale, defenders must leverage AI to enhance their cyber risk management and resilience strategies,” SAFE advises. Among the new defensive capabilities that AI offers:

- Automated Security Hygiene

This can include self-healing code, self-patching systems, and continuous attack surface monitoring, which will enhance security while reducing workload for the security team.

- Autonomous and Deceptive Defense Systems

XDR and SOAR systems use real-time analytics to respond to threats while continuously learning from telemetry to anticipate new threats.

- Augmented Oversight and Reporting.

For example, AI-powered risk quantification tools can identify new vulnerabilities and estimate their probable impact, helping organizations stay ahead of emerging threats.

Benefits of Agentic AI for Cybersecurity Teams

Agentic AI represents a leap forward in artificial intelligence, surpassing traditional reactive models by introducing autonomy, adaptability, and goal-oriented behavior. In cybersecurity, these advancements unlock capabilities that elevate both defensive strategies and operational resilience.

Enhanced Adaptability and Efficiency

Agentic AI can rapidly adapt to evolving threat environments and streamline security operations. By automating multi-step tasks—such as threat triage, vendor onboarding or vulnerability management—it significantly accelerates decision-making and reduces manual load on security teams. This autonomy leads to faster containment, fewer escalations, and lower response costs.

Context Awareness

With a deep understanding of behavioral baselines and asset importance, agentic AI offers tailored, context-aware security actions. For example, it may treat an anomaly on a developer’s laptop differently than the same anomaly on a finance team’s server. This nuance mimics human decision-making and enhances both detection accuracy and response quality.

Informed Decision-Making

Agentic systems ingest and process vast volumes of threat intelligence, telemetry, and behavioral signals in real time. They can feed risk assessments, identify attack paths, and suggest prioritized actions—enabling security leaders to make more informed, timely, and confident decisions.

Challenges of Running Autonomous AI for Cybersecurity

“Many AI agents are vulnerable to agent hijacking,” a recent blog post from NIST’s U.S. AI Safety Institute says. Agent hijacking happens when an attacker inserts malicious instructions into data that may be ingested by an AI agent, also called “indirect prompt injection”. By doing this, the hacker can prompt the AI agent to take harmful actions and turn against its human handlers.

Once on the loose inside a network, it could perform the attack methods cited above, such as fast spread and infection by malware. The study urges frequent red-teaming to keep up with the mutating tactics of AI-powered threat actors. For now, AI cybersecurity experts advise the use of agentic AI on low-risk systems or processes.

More challenges on the AI horizon that organizations should proactively address:

Trust and Transparency

Agentic systems may take actions that aren’t easily explainable—such as auto-isolating devices or modifying security policies—making it difficult to trace decision logic or justify actions during audits. Ensuring explainability and maintaining an audit trail is essential, especially in regulated environments.

Alignment with Security Objectives

Because agentic AI is goal-driven, misaligned objectives or poorly defined constraints can lead to unintended behaviors. For instance, if an AI’s goal is to reduce alert volume, it might suppress low-severity alerts that later prove to be early indicators of compromise.

Human-AI-Collaboration

Striking the right balance between autonomy and human oversight is a challenge. Over-automated systems risk acting without sufficient context, while under-automated ones fail to realize their full potential. Human-in-the-loop architectures are key to effective deployment.

Third-Party and Supply Chain Risks

Vendors embedding agentic AI into their platforms may introduce opaque logic or autonomous decision-making that your security team can’t easily audit or control. Risk management strategies must evolve to cover runtime monitoring, policy guardrails, and behavioral transparency of AI-powered agents in the supply chain.

Assessing AI-Driven Risk Scenarios with SAFE x FAIR

The FAIR Institute, alongside our SAFE team, developed the FAIR-AIR Approach Playbook. The playbook is a tool that CISOs can use to think through the risks created by artificial intelligence in a structured way, including cyber risk quantification with the FAIR model.

The Playbook identifies five key steps in approaching AI risks:

- Contextualize – You should understand the five vectors of risk for your organization, namely Shadow GenAI, Creating Your Own Foundational LLM, Hosting on LLMs, Managed LLMs and Active Cyber Attack

- Focus on your likely risk scenarios for each of the five vectors using the threats/assets/effects approach of Factor Analysis of Information Risk

- Quantify the risk in FAIR terms for probable frequency and magnitude of loss. Example:

“There is a 5% probability in the next year that employees will leak company-sensitive information via an open-source LLM Model (like chat GPT), which will lead to $5 million dollars of losses.“

- Prioritize/treat the top risk scenarios. To clarify decisions, identify the key drivers behind the risk scenarios. Example: For the Active Cyber Attack Vector, a risk driver could be phishing click rate among employees with access to large amounts of sensitive data.

- Assess findings, set a response for the organization, based on return on investment.

SAFE is collaborating with Databricks to combine the power of FAIR-AIR and the Databricks AI Security Framework to provide detailed and specific risk scenario modeling that accurately reflects the complexity and dynamics of AI technologies.

Agentic AI – What Is the Opportunity for CISOs

The joint research paper on Agentic AI by SAFE concludes with a plea that CISOs should take this as an opportunity to step up their role in the organization as business leaders.

“To confront this new era, organizations must move beyond reactive security strategies. AI-powered cybersecurity tools alone will not suffice.”

The researchers recommend “a proactive, multi-layered approach” that combines:

- Human oversight

- New governance frameworks

- AI threat simulations and quantitative cyber risk assessments

- Real-time threat intelligence gathering and sharing.

“The ability to anticipate and disrupt AI-driven attacks before they materialize will separate leaders from those left vulnerable in the wake of machine-speed threat,” the report concludes.

If you want to dive deeper into the topic, check our: CISOs Guide to Managing GenAI Risks