Identify, quantify and prioritize AI-related risks and build them into your decision-making process

By Wes Hendren

The age of Generative Artificial Intelligence (GenAI) has officially taken the world by storm. It’s not just knocking politely on your door—it’s barging in like an overly enthusiastic party guest, ready to set up shop in your living room, conference room, and C-suite.

Much like electricity at the start of the 20th century, Generative AI is quickly becoming indispensable, fundamentally reshaping every industry in its path. But with great power—and let’s be honest, some really cool new toys—comes great responsibility.

Generative AI isn’t just a shiny gadget with endless possibilities. It also introduces an entirely new bundle of risks that can catch businesses off guard. The solution? Rather than slamming the brakes on innovation (or making a run for it with your hair on fire), organizations need a measured, responsible, and forward-thinking approach.

In short: identify, quantify and prioritize your new, AI-related risks and build them into your decision-making process.

If you’re looking to stay ahead in 2025, here are five Generative AI risks you absolutely, positively need to address—yesterday.

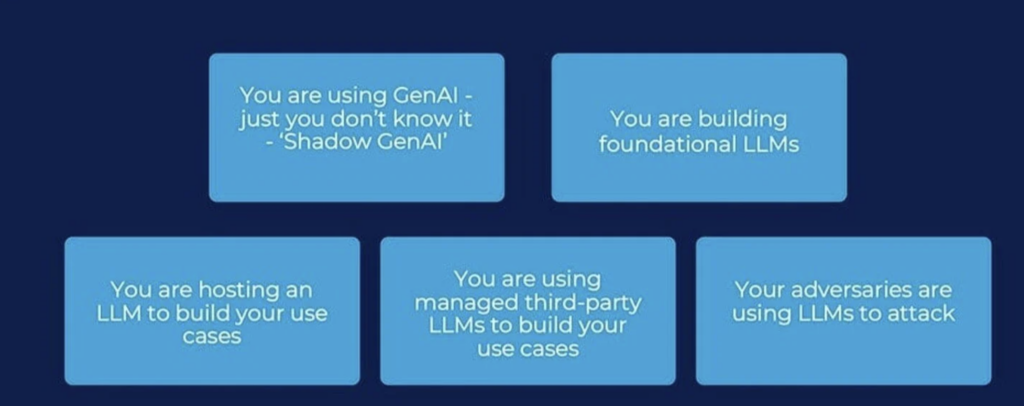

1. Shadow Generative AI: The Stealthy Risks Lurking in Your Hallways

The Threat

Remember when your kids first discovered the internet and you found out they’d been buying random apps on your phone? The same sort of mischief is happening in workplaces worldwide. Employees are using Generative AI tools (like ChatGPT, Bard, or DALL·E) without oversight or formal approval. This can lead to inadvertent leaks of sensitive data or to decisions based on AI-generated misinformation.

The Impact

Picture this scenario: An employee uses a free AI tool to summarize confidential contracts. Bam! All that proprietary data is potentially accessible to third-party services. Even scarier? A breach could cost you millions in fines, lawsuits, and lost revenue.

The Solution

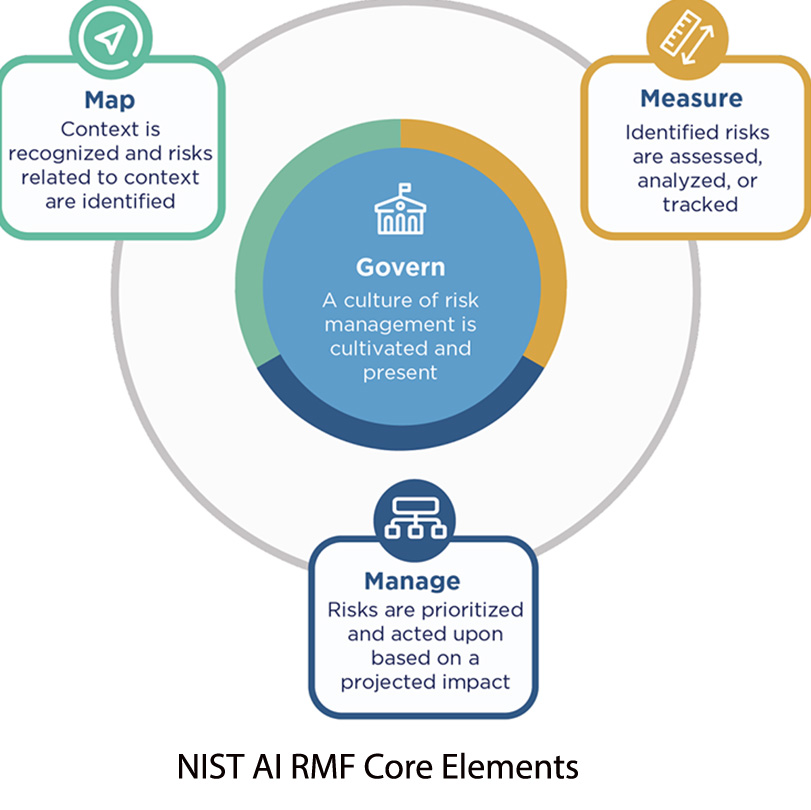

- Create a Policy Framework: Spell out how employees should (and shouldn’t) use AI tools. The NIST AI RMF Playbook is a good starting point for policy and governance development.

- Monitor & Educate: Offer vetted AI alternatives and continuously train your workforce on safe usage.

- Set Clear Boundaries: Ensure employees know what data is too sensitive to share, even with “trusted” tools.

- Identify Your AI-related Risk Scenarios: The FAIR Institute’s FAIR-AIR Playbook gives guidance on understanding your likely risk scenarios. You can move on from there to strengthen relevant cybersecurity controls.
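To make the "Set Clear Boundaries" idea a bit more concrete, here is a minimal sketch of a check that could run before a prompt leaves the building. Everything in it is an assumption for illustration: the pattern names, the regular expressions, and the `screen_prompt` and `is_safe_to_share` helpers are hypothetical, and a real policy would need far more thorough detection than three regexes.

```python
import re

# Hypothetical examples of data a policy might mark as too sensitive to share.
# These patterns are illustrative only and will miss many real-world cases.
SENSITIVE_PATTERNS = {
    "confidential_marker": re.compile(r"\bconfidential\b", re.IGNORECASE),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def screen_prompt(text):
    """Return the sorted names of every sensitive pattern found in the text."""
    return sorted(
        name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)
    )


def is_safe_to_share(text):
    """True only when none of the sensitive patterns match."""
    return not screen_prompt(text)


if __name__ == "__main__":
    prompt = "Summarize this CONFIDENTIAL contract for jane.doe@example.com"
    findings = screen_prompt(prompt)
    if findings:
        print("Blocked, found:", ", ".join(findings))
    else:
        print("OK to send")
```

A check like this is a seatbelt, not a substitute for training: it catches the obvious slip-ups and gives you a natural place to log what employees are trying to share.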

2. Foundational LLM Risks: Building on Quicksand Instead of Solid Rock

The Threat

When companies develop their own large language models (LLMs), they sometimes skip the “fine print” (like robust safety controls or top-quality training data). The result can be a model that’s biased, inaccurate, or downright dangerous.

The Impact

One disastrous decision based on faulty AI output can trigger massive brand damage or invite regulatory penalties. (No one wants to star in the next viral headline like this: “What Air Canada Lost In ‘Remarkable’ Lying AI Chatbot Case.”)

The Solution

- Embrace FAIR™: The Factor Analysis of Information Risk standard, used in conjunction with FAIR-AIR, helps quantify the likelihood and business impact of your AI-related risk scenarios.

- Prioritize SAFEguards: Don’t roll out your fancy new LLM without ensuring it’s safe, secure, and ethically trained.

- Practice Continuous Monitoring: Think of your LLM like a toddler with a box of crayons; you need to keep an eye on it before it colors all over your brand’s walls.

3. Hosting on LLMs: The Integrity Issues Hiding in Plain Sight

The Threat

Hosting LLMs can be like hosting a foreign exchange student who doesn’t quite speak your language yet. When organizations don’t define success metrics or properly tune their models, the AI’s outputs can quickly spiral into unpredictability—or worse, cause real harm.

The Impact

Imagine a financial institution relying on an untuned AI model for risk assessments. If that model’s data is off, it might as well be picking stocks using a Magic 8-Ball. The result? Damaged client trust, lost assets, and an uncomfortably prominent entry on your next risk report.

The Solution

- Shared Responsibility: Recognize that both you and your hosting provider need to set the guardrails.

- Clear Metrics: Define exactly how you’ll measure success—accuracy, bias levels, compliance thresholds, you name it.

- Stress Testing: Validate AI outputs under different conditions (like simulating a market crash) to see if it behaves or goes rogue.
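The "Clear Metrics" and "Stress Testing" points above can be sketched as a simple pass/fail gate. This is only an illustration under stated assumptions: the `Thresholds` values, the `evaluate` helper, and the idea of measuring bias as the accuracy gap between groups are all hypothetical choices, not something prescribed by FAIR or by your hosting provider.

```python
from dataclasses import dataclass


@dataclass
class Thresholds:
    # Assumed targets for illustration; set these from your own success metrics.
    min_accuracy: float = 0.95
    max_bias_gap: float = 0.05  # largest allowed accuracy gap between groups


def evaluate(results, thresholds=None):
    """Score a batch of (group, predicted, expected) tuples against thresholds."""
    thresholds = thresholds or Thresholds()
    if not results:
        raise ValueError("results must not be empty")

    # Track (correct, total) per group so accuracy can be compared across groups.
    per_group = {}
    for group, predicted, expected in results:
        hits, total = per_group.get(group, (0, 0))
        per_group[group] = (hits + int(predicted == expected), total + 1)

    accuracy = sum(hits for hits, _ in per_group.values()) / len(results)
    group_accuracy = [hits / total for hits, total in per_group.values()]
    bias_gap = max(group_accuracy) - min(group_accuracy)

    return {
        "accuracy": accuracy,
        "bias_gap": bias_gap,
        "passed": accuracy >= thresholds.min_accuracy
        and bias_gap <= thresholds.max_bias_gap,
    }
```

For stress testing, one option is to run the same gate on several labeled batches (say, a "normal market" batch and a "market crash" batch) and compare the reports side by side. A model that passes one and flunks the other has told you something useful.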


4. Managed LLMs: Trust, but Verify

The Threat

Outsourcing AI development may seem like a shortcut to success—until it isn’t. If your third-party vendor cuts corners, your sensitive data could end up as the main course in a cybercriminal’s buffet.

The Impact

A single “prompt injection” or vendor slip-up can lead to massive breaches. Guess whose reputation suffers? Spoiler: it’s not the vendor’s logo splashed across the headlines. It’s your company that gets the public shame and financial fallout.

The Solution

- Rigorous Vendor Audits: Make sure your partners adhere to the same ironclad standards you do. The FAIR Third-Party Assessment Model (FAIR-TAM) can help you tier your third-party vendors for the likely risk exposure they present.

- Encryption & Access Controls: Secure your data like it’s the crown jewels—because, in today’s digital age, it is.

- Monitor, Monitor, Monitor: Continuous oversight is crucial, because trust is great… but verification is a lifesaver.

5. Active Cyber Attacks: AI as Your Frenemy

The Threat

AI isn’t just for the good guys. Cybercriminals have also discovered Generative AI—and they’re using it to craft disturbingly personalized phishing attacks and sniff out vulnerabilities at lightning speed.

The Impact

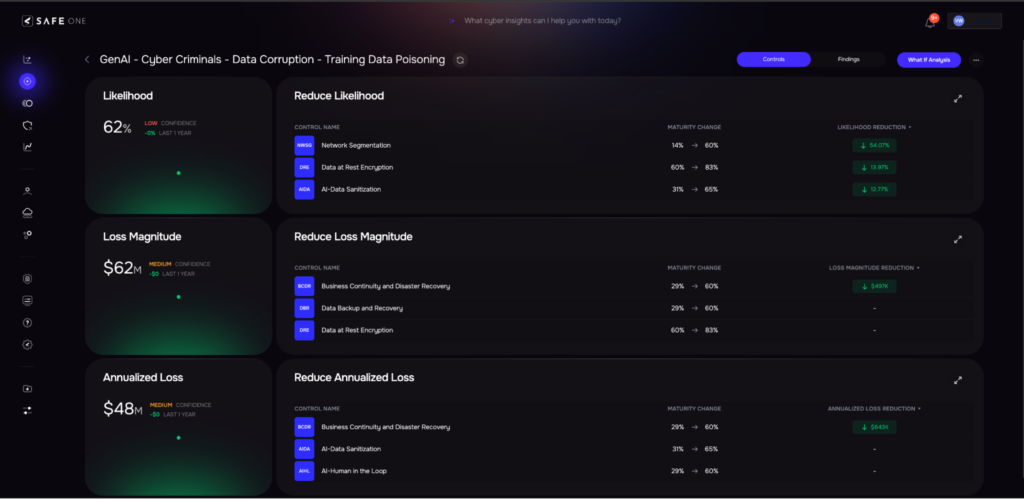

Sample analysis output, active cyber attack risk scenario:

- Enhanced phishing attacks lead to breach

- Key risk driver: phishing click rate among employees with access to large amounts of sensitive data.

- 40% probability of $350 million loss

Yes, you read those numbers right. In short, ignoring AI-fueled cyber threats is like leaving your front door unlocked with a giant neon sign that says “Come on in!”

The Solution

- Security on Steroids: Employ AI tools to defend against AI-driven attacks.

- Continuous Education: Run phishing simulations and upskill your team. Everyone is part of your cybersecurity line of defense (even that guy in accounting who still uses “password123”).

- Real-Time Monitoring: Use advanced threat detection to catch and quash attacks before they do real damage.

Why Quantifying AI Risks Is a Game-Changer

Modern risk management isn’t about guessing or crossing your fingers—it’s about translating uncertainty into hard financial metrics. Models based on the FAIR standard let you measure probable loss event frequency and magnitude. Once you speak the CFO’s language (a.k.a. numbers), you can make better strategic decisions that align with your business priorities.

When you can put a price tag on a cyberattack or a potential data leak, it suddenly becomes a lot clearer where to funnel your limited resources and time.
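To give a feel for what "frequency and magnitude" looks like in practice, here is a rough Monte Carlo sketch. It is a heavy simplification and not the FAIR model itself: it assumes at most one loss event per year, draws the loss size from a triangular distribution, and uses hypothetical function names (`simulate_annual_loss`, `summarize`) and made-up inputs chosen purely for illustration.

```python
import random
import statistics


def simulate_annual_loss(event_probability, loss_low, loss_mode, loss_high,
                         trials=10_000, seed=42):
    """Simulate one year of loss per trial.

    Simplifying assumption: each simulated year has at most one loss event,
    which occurs with event_probability. Loss size is drawn from a triangular
    distribution between loss_low and loss_high, peaking at loss_mode.
    """
    rng = random.Random(seed)  # seeded so that runs are repeatable
    losses = []
    for _ in range(trials):
        if rng.random() < event_probability:
            losses.append(rng.triangular(loss_low, loss_high, loss_mode))
        else:
            losses.append(0.0)
    return losses


def summarize(losses):
    """Return the average loss, a rough 90th percentile, and the share of years with a loss."""
    ordered = sorted(losses)
    p90 = ordered[int(0.9 * (len(ordered) - 1))]
    return {
        "mean": statistics.fmean(losses),
        "p90": p90,
        "share_with_loss": sum(loss > 0 for loss in losses) / len(losses),
    }


if __name__ == "__main__":
    # Made-up inputs: 40% yearly chance of an event costing $1M to $20M.
    summary = summarize(simulate_annual_loss(0.4, 1e6, 5e6, 2e7))
    for name, value in summary.items():
        print(f"{name}: {value:,.2f}")
```

The useful part is not any single number but the comparison: rerun the simulation with a lower event probability (say, after better phishing training) and the difference in average loss is a dollar figure you can weigh against the cost of the control.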

2025: The Year of Responsible AI

Generative AI isn’t the villain of the story; it’s a groundbreaking tool that can propel your business light-years ahead, if you manage it responsibly.

The enterprises thriving in 2025 will be the ones that embrace AI with open arms and a well-structured playbook. The future belongs to those who measure their risks, prioritize countermeasures, and lead with a bold vision.

So, are you ready to transform Generative AI from a potential hazard into your next strategic advantage?

Your move…

Quantitative risk management for AI with SAFE One

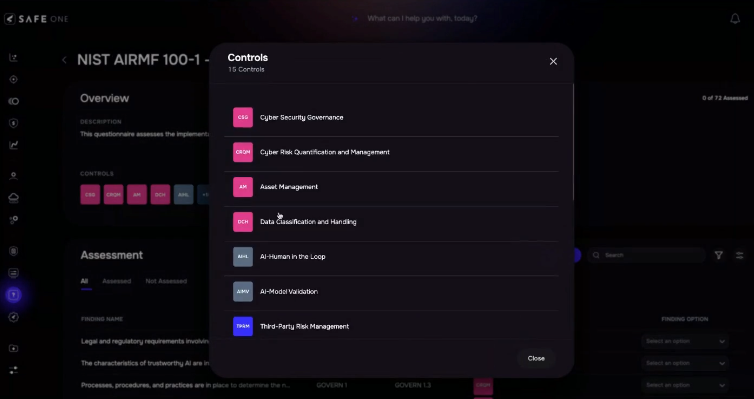

NIST AI RMF compliance with SAFE One

The SAFE One platform’s GenAI Risk Module provides a complete solution to GenAI risk management:

- AI Risk Quantification

- Scenario-Based Risk Modeling

- Automated Risk Scenario Information

- AI Posture Evaluation

- Real-Time Risk Monitoring and Updates

- All based on the FAIR standards.

See SAFE GenAI Risk in action. Schedule your 1:1 demo with a SAFE cyber risk expert today.